Artificial Intelligence and Machine Learning: Unit V: Neural Networks

Gradient Descent Optimization

Gradient Descent is an optimization algorithm in machine learning used to minimize a function by iteratively moving towards the minimum value of the function.

• We essentially use this algorithm when we have to find the parameter values that make a given cost function as small as possible. In machine learning, more often than not we try to minimize loss functions (like Mean Squared Error). By minimizing the loss function, we can improve our model, and Gradient Descent is one of the most popular algorithms used for this purpose.

•

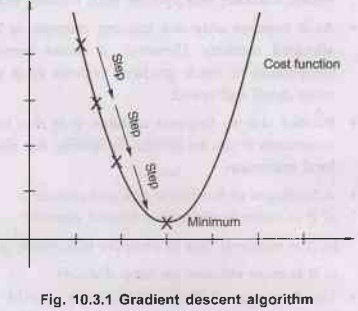

The graph above shows how exactly a Gradient Descent

set of rules works.

• We first pick a point on the cost function and begin moving in steps towards the minimum point. The size of each step, or how quickly we converge to the minimum point, is defined by the Learning Rate. We can cover more ground with a higher learning rate, but at the risk of overshooting the minimum. On the other hand, small steps (smaller learning rates) will take a lot of time to reach the lowest point.

• Now, the direction in which the algorithm has to move (towards the minimum) is also important. We calculate this by using derivatives. You need to be familiar with derivatives from calculus. A derivative is basically the slope of the graph at a particular point. We get it by finding the tangent line to the graph at that point, and the algorithm moves in the direction opposite to the sign of that slope. A steeper tangent generally suggests that the point is farther from the minimum, so more steps are needed to reach it; a less steep tangent suggests that fewer steps are required to reach the minimum point.
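The effect of the learning rate and the derivative described above can be illustrated with a minimal sketch. The cost function f(x) = (x - 3)^2, the starting point, the step count and the three learning rates are all assumptions chosen for illustration; they do not come from the notes.

```python
def gradient_descent(grad, start, learning_rate, steps):
    """Repeatedly step against the derivative, returning every point visited."""
    x = start
    path = [x]
    for _ in range(steps):
        x = x - learning_rate * grad(x)  # move opposite to the slope
        path.append(x)
    return path


# Hypothetical cost function f(x) = (x - 3)^2, whose minimum is at x = 3.
def cost(x):
    return (x - 3.0) ** 2


def cost_derivative(x):
    return 2.0 * (x - 3.0)  # slope of the tangent line at x


# Assumed learning rates: one small, one moderate, one too large.
for lr in (0.01, 0.1, 1.1):
    final_x = gradient_descent(cost_derivative, start=0.0, learning_rate=lr, steps=50)[-1]
    print(f"learning rate {lr}: x = {final_x:.4f}, cost = {cost(final_x):.4f}")
```

With these assumed values, the smallest rate is still well short of x = 3 after 50 steps, the moderate rate lands very close to it, and the largest rate overshoots further on every step and moves away from the minimum.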

Stochastic Gradient Descent

• The word 'stochastic' refers to a system or process that is linked with random probability. Hence, in Stochastic Gradient Descent, a few samples are selected randomly instead of the whole data set for each iteration.

• Stochastic Gradient Descent (SGD) is a type of gradient descent that uses one training example per iteration. Within a training epoch, it processes each example in the dataset and updates the model's parameters after each example, one at a time.

• As it requires only one training example at a time, it is easier to fit in allocated memory. However, it loses some computational efficiency in comparison to batch gradient descent, because it performs many more parameter updates per epoch.

• Further, because each update is based on a single example, the gradient it follows is noisy. However, this noise can sometimes be helpful in escaping a local minimum and finding the global minimum.

• Advantages of Stochastic Gradient Descent:

a) It is easier to fit in allocated memory.

b) Each update is relatively fast to compute compared with batch gradient descent.

c) It is more efficient for large datasets.

• Disadvantages of Stochastic Gradient Descent:

a) SGD requires a number of hyperparameters, such as the regularization parameter and the number of iterations.

b) SGD is sensitive to feature scaling.
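The one-example-per-update behaviour described in this section can be sketched as follows. This is a minimal illustration, not a definitive implementation: the function name `sgd_linear_regression`, the straight-line model, the squared-error loss, the toy data and the hyperparameter values are all assumptions made for the example.

```python
import random


def sgd_linear_regression(xs, ys, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w * x + b, updating w and b after every single example."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a random order each epoch
        for i in indices:
            error = (w * xs[i] + b) - ys[i]
            # Gradient of the squared error (error ** 2) for this one example.
            w -= learning_rate * 2.0 * error * xs[i]
            b -= learning_rate * 2.0 * error
    return w, b


# Hypothetical noise-free data generated from y = 2x + 1.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = sgd_linear_regression(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Only one example is needed in memory for each update, which is the memory advantage listed above. The learning rate and the number of epochs are hyperparameters that have to be chosen by hand, and a learning rate that works for these small inputs may not work if the feature is rescaled to much larger values.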
