Artificial Intelligence and Machine Learning: Unit III: Supervised Learning

Types of Learning

Supervised Learning - Artificial Intelligence and Machine Learning

Learning is essential for unknown environments, i.e., when the designer lacks omniscience. Learning simply means incorporating information from the training examples into the system.


• Learning is any change in a system that allows it to perform better the second time on repetition of the same task or on another task drawn from the same population. One part of learning is acquiring knowledge and new information, and the other part is problem-solving.

• Supervised and unsupervised learning are different types of machine learning methods. A computational learning model should be clear about the following aspects:

1. Learner: Who or what is doing the learning, for example, a program or algorithm.
2. Domain: What is being learned.
3. Goal: Why the learning is done.
4. Representation: The way the objects to be learned are represented.
5. Algorithmic technology: The algorithmic framework to be used.
6. Information source: The information (training data) the program uses for learning.
7. Training scenario: The description of the learning process.

Learning is constructing or modifying representations of what is being experienced. To learn means to gain knowledge of something by study, experience or being taught.

• Machine learning is a scientific discipline concerned with the design and development of algorithms that allow computers to evolve behaviors based on empirical data, such as sensor data or databases.

• Machine learning is usually divided into two main types: supervised learning and unsupervised learning.

Why do Machine Learning?

1. To understand and improve the efficiency of human learning.
2. To discover new things or structure that is unknown to humans (example: data mining).
3. To fill in skeletal or incomplete specifications about a domain.

Supervised Learning

• Supervised learning is the machine learning task of inferring a function from supervised training data. The training data consist of a set of training examples. The task of the supervised learner is to predict the output behavior of a system for any set of input values after an initial training phase.

• In supervised learning, the network is trained by providing it with input and matching output patterns. These input-output pairs are usually provided by an external teacher.

• Human learning is based on past experiences. A computer does not have experiences.

• A computer system learns from data, which represent some "past experiences" of an application domain.

• The aim is to learn a target function that can be used to predict the values of a discrete class attribute, e.g., approved or not approved, and high risk or low risk. This task is commonly called supervised learning, classification or inductive learning.

• Training data includes both the input and the desired results. For some examples, the correct results (targets) are known and are given as input to the model during the learning process. The construction of proper training, validation and test sets is crucial. These methods are usually fast and accurate.

• The model has to be able to generalize: it must give the correct results when new data are given as input without the target being known a priori.

• In supervised learning, each training example is a pair consisting of an input object and a desired output value.

•

A supervised learning algorithm analyzes the training

data and produces an inferred function, which is called a classifier or a

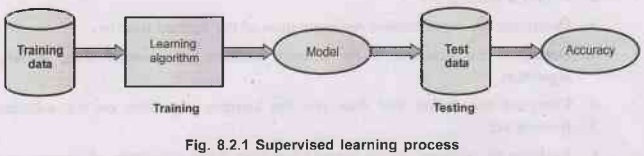

regression function. Fig. 8.2.1 shows supervised learning process.

• The learned model helps the system perform the task better than it would without learning.

•

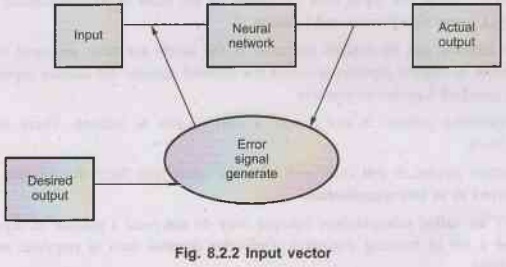

Each input vector requires a corresponding target

vector.

Training

Pair = (Input Vector, Target Vector)

• Fig. 8.2.2 shows the input vector.

• Supervised learning denotes a method in which some input vectors are collected and presented to the network. The output computed by the network is observed, and the deviation from the expected answer is measured. The weights are corrected according to the magnitude of the error, in the way defined by the learning algorithm.

• Supervised learning is further divided into methods which use reinforcement or error correction. The perceptron learning algorithm is an example of supervised learning with reinforcement.
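The weight-correction loop in the last two bullets can be illustrated with the perceptron learning rule that the text cites: present each training pair, compare the network output with the target, and correct the weights only when they disagree. This is a minimal sketch under stated assumptions, not the text's own algorithm: the helper names `step`, `predict` and `train_perceptron`, the learning rate `lr`, the bias term and the logical AND training pairs are all hypothetical choices made for the example.

```python
def step(z):
    """Threshold activation: output 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def predict(weights, bias, x):
    """Network output for input vector x (hypothetical helper)."""
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(pairs, lr=1.0, epochs=20):
    """Perceptron rule: correct each weight in proportion to (target - output)."""
    weights = [0.0] * len(pairs[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in pairs:
            error = target - predict(weights, bias, x)  # deviation from the expected answer
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every training pair is now classified correctly
            break
    return weights, bias


# Training pairs = (input vector, target) for the logical AND function.
and_pairs = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_pairs)
print([predict(w, b, x) for x, _ in and_pairs])  # [0, 0, 0, 1]
```

Because AND is linearly separable, training stops as soon as a full pass over the pairs produces no errors; for data that is not linearly separable, the `epochs` cap is what ends the loop.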

In order to solve a given supervised learning problem, the following steps are performed:

1. Determine the type of training examples.
2. Collect a training set.
3. Determine the input feature representation of the learned function.
4. Determine the structure of the learned function and the corresponding learning algorithm.
5. Complete the design and then run the learning algorithm on the collected training set.
6. Evaluate the accuracy of the learned function. After parameter adjustment and learning, the performance of the resulting function should be measured on a test set that is separate from the training set.
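The six steps can be walked through on a toy problem. The sketch below is a minimal illustration under stated assumptions: the loan-style data points, the (income, debt) features and the helper names `nearest_neighbour_predict` and `accuracy` are hypothetical, and a 1-nearest-neighbour rule simply stands in for whatever learned function a real design would choose in step 4. The point it shows is step 6: accuracy is measured only on examples held out from training.

```python
import math
import random


def nearest_neighbour_predict(train, x):
    """Step 4 (assumed structure): label of the closest training example."""
    closest = min(train, key=lambda pair: math.dist(pair[0], x))
    return closest[1]


def accuracy(train, test):
    """Step 6: fraction of held-out examples predicted correctly."""
    correct = sum(nearest_neighbour_predict(train, x) == y for x, y in test)
    return correct / len(test)


# Steps 1-3: made-up (feature vector, label) pairs.
# Features are hypothetical (income, debt) values.
data = [
    ((8.0, 1.0), "approved"), ((7.5, 2.0), "approved"), ((9.0, 1.5), "approved"),
    ((6.5, 1.0), "approved"), ((8.5, 2.5), "approved"),
    ((2.0, 6.0), "not approved"), ((3.0, 7.5), "not approved"),
    ((2.5, 5.0), "not approved"), ((1.5, 6.5), "not approved"),
    ((3.5, 8.0), "not approved"),
]

# Keep the test set separate from the training set.
rng = random.Random(0)
shuffled = data[:]
rng.shuffle(shuffled)
train, test = shuffled[:7], shuffled[7:]

# Step 5: 1-NN has no parameters to fit; "training" just stores the set.
print(f"test accuracy: {accuracy(train, test):.2f}")  # test accuracy: 1.00
```

The two made-up classes are far apart, so any split gives perfect held-out accuracy; on real data the held-out score is usually lower than the training score, which is exactly why step 6 insists on a separate test set.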

8.2.2 Unsupervised Learning

• The model is not provided with the correct results during training. It can be used to cluster the input data into classes on the basis of their statistical properties only. This leaves two issues to address: cluster significance and labeling.

• The labeling can be carried out even if labels are only available for a small number of objects representative of the desired classes. All similar input patterns are grouped together as clusters.

• If a matching pattern is not found, a new cluster is formed. There is no error feedback.

• An external teacher is not used, and learning is based only on local information. It is also referred to as self-organization.

• These methods are called unsupervised because they do not need a teacher or supervisor to label a set of training examples. Only the original data is required to start the analysis.

• In contrast to supervised learning, unsupervised or self-organized learning does not require an external teacher. During the training session, the neural network receives a number of different input patterns, discovers significant features in these patterns and learns how to classify input data into appropriate categories.

• Unsupervised learning algorithms aim to learn rapidly and can be used in real time. Unsupervised learning is frequently employed for data clustering, feature extraction, etc.

• Another mode of learning, called recording learning by Zurada, is typically employed for associative memory networks. An associative memory network is designed by recording several ideal patterns into the network's stable states.
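The grouping behaviour in the bullets above (similar inputs join a cluster, an unmatched input starts a new one, no error feedback) matches a simple scheme often called leader clustering. The sketch below is one illustrative instance, not the text's algorithm: the Euclidean distance, the `threshold` parameter, the running-mean cluster centres and the sample points are all assumptions.

```python
import math


def leader_cluster(patterns, threshold):
    """Group similar patterns without labels or error feedback.

    A pattern joins the nearest existing cluster if it lies within
    `threshold` of that cluster's centre; otherwise it forms a new cluster.
    """
    centres = []   # current centre (mean) of each cluster
    members = []   # patterns assigned to each cluster
    for p in patterns:
        if centres:
            dists = [math.dist(p, c) for c in centres]
            best = min(range(len(centres)), key=lambda i: dists[i])
            if dists[best] <= threshold:
                members[best].append(p)
                k = len(members[best])
                # Move the centre to the mean of the cluster's members.
                centres[best] = tuple(sum(coord) / k for coord in zip(*members[best]))
                continue
        # No matching cluster was found: form a new one.
        centres.append(tuple(p))
        members.append([p])
    return centres, members


points = [(1.0, 1.0), (1.2, 0.8), (5.0, 5.0), (0.9, 1.1), (5.2, 4.8), (9.0, 1.0)]
centres, members = leader_cluster(points, threshold=1.0)
print(len(centres), [len(m) for m in members])  # 3 [3, 2, 1]
```

The result depends on the order in which patterns arrive and on `threshold`; a smaller threshold produces more, tighter clusters.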

Difference between Supervised and Unsupervised Learning

Semi-supervised Learning

• Semi-supervised learning uses both labeled and unlabeled data to improve supervised learning. The goal is to learn a predictor that predicts future test data better than the predictor learned from the labeled training data alone.

• Semi-supervised learning is motivated by its practical value in learning faster, better and cheaper. In many real-world applications, it is relatively easy to acquire a large amount of unlabeled data x.

• For example, documents can be crawled from the Web, images can be obtained from surveillance cameras, and speech can be collected from broadcasts. However, their corresponding labels y for the prediction task, such as sentiment orientation, intrusion detection and phonetic transcript, often require slow human annotation and expensive laboratory experiments.

• In many practical learning domains, there is a large supply of unlabeled data but limited labeled data, which can be expensive to generate. Examples include text processing, video indexing and bioinformatics.

• Semi-supervised learning makes use of both labeled and unlabeled data for training, typically a small amount of labeled data with a large amount of unlabeled data. When unlabeled data is used in conjunction with a small amount of labeled data, it can produce a considerable improvement in learning accuracy.

• Semi-supervised learning sometimes enables predictive model testing at reduced cost.

• Semi-supervised classification: Training on labeled data is supplemented with additional unlabeled data, frequently resulting in a more accurate classifier.

• Semi-supervised clustering: A small amount of labeled data is used to aid and bias the clustering of unlabeled data.
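Semi-supervised classification can be sketched with self-training, one common technique among several: fit a classifier on the few labeled points, pseudo-label the unlabeled points it is confident about, add them to the labeled set and repeat. The nearest-centroid classifier, the distance-margin confidence test, the `min_margin` value, the helper names and the data below are all assumptions chosen for illustration.

```python
import math


def centroids(labeled):
    """Mean feature vector of each class in the labeled set."""
    groups = {}
    for x, y in labeled:
        groups.setdefault(y, []).append(x)
    return {y: tuple(sum(c) / len(xs) for c in zip(*xs)) for y, xs in groups.items()}


def self_train(labeled, unlabeled, min_margin=1.0):
    """Self-training with a nearest-centroid classifier (needs >= 2 classes).

    A point is pseudo-labeled when its nearest class centroid is at least
    `min_margin` closer than the runner-up centroid.
    """
    labeled = list(labeled)
    unlabeled = list(unlabeled)
    while unlabeled:
        cents = centroids(labeled)
        confident, uncertain = [], []
        for x in unlabeled:
            ranked = sorted(cents, key=lambda y: math.dist(x, cents[y]))
            margin = math.dist(x, cents[ranked[1]]) - math.dist(x, cents[ranked[0]])
            if margin >= min_margin:
                confident.append((x, ranked[0]))
            else:
                uncertain.append(x)
        if not confident:  # nothing more the classifier is sure about
            break
        labeled.extend(confident)
        unlabeled = uncertain
    return labeled, unlabeled


labeled = [((0.0, 0.0), "A"), ((10.0, 10.0), "B")]
unlabeled = [(1.0, 0.5), (0.5, 1.5), (9.0, 9.5), (8.5, 10.0), (5.0, 5.2)]
grown, left_over = self_train(labeled, unlabeled)
print(len(grown), left_over)  # 6 [(5.0, 5.2)]
```

The point at (5.0, 5.2) lies roughly midway between the two classes, so it never passes the margin test and stays unlabeled; the other four points are absorbed in the first round and shift the centroids toward the data.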

Reinforcement Learning

• In supervised learning the learner gets immediate feedback, and in unsupervised learning it gets no feedback. In reinforcement learning, however, it gets delayed scalar feedback.

•

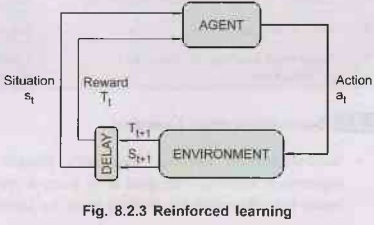

Reinforcement learning is learning what to do and how

to map situations to actions. The learner is not told which actions to take.

Fig. 8.2.3 shows concept of reinforced learning.

• Reinforcement learning deals with agents that must sense and act upon their environment. It combines classical artificial intelligence and machine learning techniques.

• It allows machines and software agents to automatically determine the ideal behavior within a specific context in order to maximize their performance. Simple reward feedback is required for the agent to learn its behavior; this is known as the reinforcement signal.

• The two most important distinguishing features of reinforcement learning are trial-and-error search and delayed reward.

• With reinforcement learning algorithms, an agent can improve its performance by using the feedback it gets from the environment. This environmental feedback is called the reward signal.

• Based on accumulated experience, the agent needs to learn which action to take in a given situation in order to achieve a desired long-term goal. Essentially, actions that lead to long-term rewards need to be reinforced. Reinforcement learning has connections with control theory, Markov decision processes and game theory.

• Example of reinforcement learning: A mobile robot decides whether it should enter a new room in search of more trash to collect or start trying to find its way back to its battery recharging station. It makes its decision based on how quickly and easily it has been able to find the recharger in the past.
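Trial-and-error learning from a scalar reward signal can be shown in its simplest form with an epsilon-greedy agent choosing between the robot's two options. This is a hypothetical sketch: the action names, the average rewards, the Gaussian noise, `epsilon` and the helper `run_bandit` are assumptions, and the setting (a multi-armed bandit) has no states, so it leaves out the delayed-reward aspect.

```python
import random


def run_bandit(mean_reward, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy action selection with sample-average value estimates."""
    rng = random.Random(seed)
    actions = list(mean_reward)
    estimate = {a: 0.0 for a in actions}
    count = {a: 0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)               # explore: try any action
        else:
            action = max(actions, key=estimate.get)    # exploit: best so far
        reward = rng.gauss(mean_reward[action], 1.0)   # noisy scalar feedback
        count[action] += 1
        # Incremental mean: move the estimate toward the observed reward.
        estimate[action] += (reward - estimate[action]) / count[action]
    return estimate, count


# Hypothetical average rewards for the robot's two choices.
robot = {"search_room": 1.0, "go_recharge": 2.0}
estimate, count = run_bandit(robot)
print(max(estimate, key=estimate.get), count)
```

With `epsilon=0.1` the agent spends most steps on the action it currently rates best but keeps occasionally sampling the other, which is what lets it correct an early wrong estimate.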

1. Elements of Reinforcement Learning

•

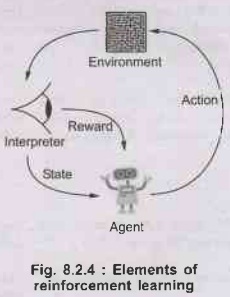

Reinforcement learning elements are as follows:

1.

Policy

2.

Reward function

3.

Value function

4.

Model of the environment

•

Fig. 8.2.4 shows elements of RL.

• Policy: The policy defines the learning agent's behavior at a given time. It is a mapping from perceived states of the environment to actions to be taken when in those states.

• Reward function: The reward function defines the goal in a reinforcement learning problem. It maps each perceived state of the environment to a single number, the reward.

• Value function: Value functions specify what is good in the long run. The value of a state is the total amount of reward an agent can expect to accumulate over the future, starting from that state.

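• Model of the environment: A model mimics the behavior of the environment, for example by predicting the next state and reward for a given state and action. Models are used for planning.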

• Credit assignment problem: Reinforcement learning algorithms learn to generate an internal value for the intermediate states that reflects how good they are at leading to the goal.

• The learning decision maker is called the agent. The agent interacts with the environment, which includes everything outside the agent.

• The agent has sensors to determine its state in the environment, and it takes actions that modify its state.

• The reinforcement learning problem model is an agent continuously interacting with an environment. The agent and the environment interact in a sequence of time steps. At each time step t, the agent receives the state of the environment and a scalar numerical reward for the previous action, and then selects an action.

• Reinforcement learning is a technique for solving Markov decision problems.

• Reinforcement learning uses a formal framework defining the interaction between a learning agent and its environment in terms of states, actions and rewards. This framework is intended to be a simple way of representing the essential features of the artificial intelligence problem.
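The elements listed above can be seen together in a small tabular Q-learning sketch. Q-learning is one standard algorithm, chosen here as an assumption; the text does not prescribe it. A hypothetical five-state corridor plays the environment and pays a reward only on reaching the goal, so the reward is delayed; the epsilon-greedy choice is the policy; the table `q` is an action-value function; and the discount factor `gamma` (an assumption: the text defines value as total future reward, and discounting is a common variant) propagates credit back to earlier states, which is how the credit assignment problem is handled here. The agent is model-free: it never consults `env_step` for planning, it only experiences its outcomes. The names `N_STATES`, `ACTIONS`, `env_step` and `q_learning` and all parameter values are hypothetical.

```python
import random

N_STATES = 5                 # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = ("left", "right")


def env_step(state, action):
    """Environment: return (next_state, reward, done). Reward is paid only at the goal."""
    if action == "left":
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Policy: epsilon-greedy, breaking ties randomly among the best actions.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = env_step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            if done:
                target = reward
            else:
                target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


q = q_learning()
greedy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(greedy)
```

Only the final move into state 4 is ever rewarded, yet after training the greedy policy prefers "right" in every earlier state, because each update passes a discounted share of the goal reward one step further back along the corridor.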

Difference between Supervised, Unsupervised and Reinforcement Learning


CS3491, 4th Semester CSE/ECE Dept, 2021 Regulation