Introduction to Operating Systems: Unit II(a): Process Management

Two marks Questions with Answers

Process Management - Introduction to Operating Systems


Q.1

Define process. What is the information maintained in a PCB ?

Ans. A process is a program in execution, i.e. a running instance of a program. A PCB maintains a pointer, the process state, the process number, CPU registers, the program counter (PC), memory allocation information, etc.

Q.2

Define task control block.

Ans. A task control block (TCB) is another name for the process control block (PCB).

Q.3

What is PCB? Specify the information maintained in it. AU CSE/IT: Dec.-12

Ans. Each process is represented in the operating system by a process control block (PCB). The PCB contains information such as the process state, program counter, CPU registers, accounting information, etc.

Q.4

What is an independent process ?

Ans. An independent process cannot affect or be affected by the execution of any other process.

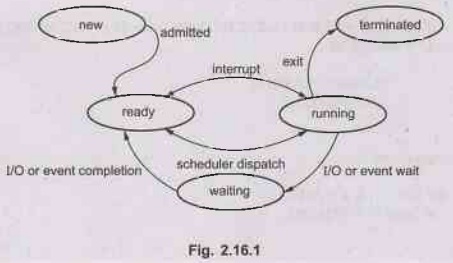

Q.5 Name and draw five different process states with proper definition. AU: Dec.-17

Ans. The process states are new, running, waiting, ready and terminated. Fig. 2.16.1 shows the process state diagram.
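As a rough illustration of the five states, the sketch below encodes the transitions of the classic five-state model as a lookup table. The names `State`, `TRANSITIONS` and `can_move` are hypothetical, chosen only for this example.

```python
from enum import Enum


class State(Enum):
    NEW = "new"                # the process is being created
    READY = "ready"            # waiting to be assigned to a processor
    RUNNING = "running"        # instructions are being executed
    WAITING = "waiting"        # waiting for an event such as I/O completion
    TERMINATED = "terminated"  # the process has finished execution


# Allowed transitions in the classic five-state model.
TRANSITIONS = {
    State.NEW: {State.READY},                    # admitted
    State.READY: {State.RUNNING},                # scheduler dispatch
    State.RUNNING: {
        State.READY,                             # interrupt or quantum expired
        State.WAITING,                           # I/O or event wait
        State.TERMINATED,                        # exit
    },
    State.WAITING: {State.READY},                # I/O or event completion
    State.TERMINATED: set(),                     # no way back
}


def can_move(src: State, dst: State) -> bool:
    """Return True if the five-state model allows the move src -> dst."""
    return dst in TRANSITIONS[src]
```

For instance, `can_move(State.WAITING, State.RUNNING)` is false: a waiting process must first return to the ready queue.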

Q.6

Define context switching.

Ans. Switching the CPU to another process requires saving the state of the old process and loading the saved state of the new process. This task is known as a context switch.

Q.7

What are the reasons for terminating execution of child process ?

Ans. A parent may terminate the execution of its child processes via the abort system call for a variety of reasons, such as:

1. The child has exceeded its allocated resources.

2. The task assigned to the child is no longer required.

3. The parent is exiting and the operating system does not allow a child to continue if its parent terminates.

Q.8

What is ready queue ?

Ans. The processes that are residing in

main memory and are ready and waiting to execute are kept on a list called the

ready queue.

Q.9

List out the data fields associated with process control blocks.

Ans. The data fields associated with a process control block are the CPU registers, program counter (PC), process state, memory management information, input-output status information, etc.

Q.10

What are the properties of a communication link ?

Ans. Properties of a communication link (under direct communication):

1. Links are established automatically.

2. A link is associated with exactly one pair of communicating processes.

3. Between each pair there exists exactly one link.

4. The link may be unidirectional, but is usually bidirectional.

Q.11

What is socket ?

Ans. A socket is

defined as an endpoint for communication.

Q.12

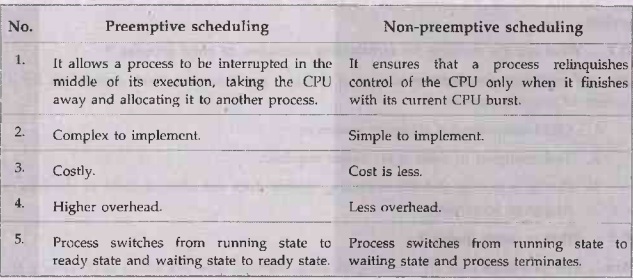

What is non-preemptive scheduling ?

Ans. Non-preemptive scheduling ensures that a process relinquishes control of the CPU only when it finishes its current CPU burst.

Q.13 Differentiate

preemptive and non-preemptive scheduling.

Ans. :

Q.14 What

do you mean by short term scheduler ?

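Ans. The short term scheduler, also known as the dispatcher, executes most frequently and makes the finest-grained decision of which process should execute next. This scheduler is invoked whenever an event occurs that may lead to suspending the current process, such as a clock interrupt, an I/O interrupt or a system call.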

Q.15

Which are the criteria used for CPU scheduling ?

Ans. The criteria used for CPU scheduling are CPU utilization, throughput, turnaround time, waiting time and response time.
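A small worked example may help fix these criteria. The sketch below assumes three processes that all arrive at time 0 and are served first-come, first-served; the burst lengths and the function name `fcfs_metrics` are hypothetical.

```python
def fcfs_metrics(bursts):
    """Compute per-process waiting and turnaround times under FCFS.

    bursts: CPU burst lengths of processes that all arrive at time 0,
    listed in the order they are served.
    """
    waiting, turnaround = [], []
    clock = 0
    for burst in bursts:
        waiting.append(clock)        # time spent in the ready queue
        clock += burst
        turnaround.append(clock)     # submission (t = 0) to completion
    return waiting, turnaround


bursts = [24, 3, 3]                  # hypothetical workload
waiting, turnaround = fcfs_metrics(bursts)
avg_wait = sum(waiting) / len(waiting)        # (0 + 24 + 27) / 3 = 17.0
throughput = len(bursts) / turnaround[-1]     # 3 processes in 30 time units
```

Serving the two short processes first would lower the average waiting time, which is why the choice of scheduling algorithm matters for these criteria.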

Q.16 Explain

why two level scheduling is commonly used.

Ans. It provides a hybrid solution to the problem of providing good system utilization and good user service simultaneously.

Q.17

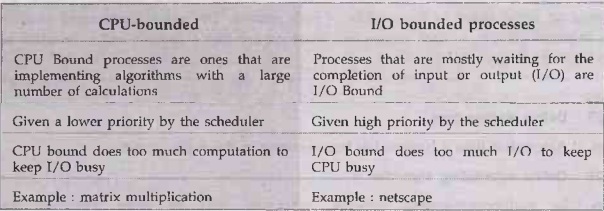

Why is it important for the scheduler to distinguish I/O-bound programs from

CPU-bound programs?

Ans. I/O-bound programs perform only a small amount of computation before performing I/O. Such programs typically do not use up their entire CPU quantum. CPU-bound programs, on the other hand, use their entire quantum without performing any blocking I/O operations. Consequently, one could make better use of the computer's resources by giving higher priority to I/O-bound programs and allowing them to execute ahead of the CPU-bound programs.

Q.18

What is response time?

Ans. Response time is the amount of time from when a request is submitted until the first response is produced, not the time it takes to output that response.

Q.19 Define

waiting time.

Ans. Waiting time is the amount of time a process has spent waiting in the ready queue.

Q.20 Define scheduling algorithm.

Ans. In multiprogramming systems, whenever two or more processes are simultaneously in the ready state, a choice has to be made as to which process to run next. The part of the OS that makes the choice is called the scheduler and the algorithm it uses is called the scheduling algorithm.

Q.21 Define

the term dispatch latency.

Ans. Dispatch latency is the time it takes for the dispatcher to stop one process and start another running.

Q.22

What is preemptive priority method?

Ans. A preemptive priority scheduling algorithm will preempt the CPU if the priority of the newly arrived process is higher than the priority of the currently running process.

Q.23

What is medium term scheduling ?

Ans. Medium-term scheduling is used especially with time-sharing systems as an intermediate scheduling level. A swapping scheme is implemented to remove partially run programs from memory and reinstate them later to continue where they left off.

Q.24

What is preemptive scheduling ?

Ans. Preemptive scheduling can take the CPU away from a process in the middle of its execution and give the CPU to another process.

Q.25

What is the difference between long-term scheduling and short-term scheduling ?

Ans. Long term scheduling adds jobs to

the ready queue from the job queue. Short term scheduling dispatches jobs from

the ready queue to the running state.

Q.26 List

out any four scheduling criteria.

Ans. Response time, throughput, waiting time and turnaround time.

Q.27

Define the term 'Dispatch latency'.

Ans. Dispatch latency is the time it takes for the dispatcher to stop one process and start another running.

Q.28

Distinguish between CPU-bounded and I/O bounded processes.

Ans.

Q.29

Define priority inversion problem.

Ans. Priority inversion occurs when a higher priority process has to wait for a lower priority process to finish, typically because the lower priority process holds a resource that the higher priority process needs.

Q.30 What

advantage is there in having different time-quantum sizes on different levels

of a multilevel queuing system?

Ans. Processes that need more frequent servicing, for instance interactive processes such as editors, can be in a queue with a small time quantum. Processes with no need for frequent servicing can be in a queue with a larger quantum, requiring fewer context switches to complete the processing and thus making more efficient use of the computer.

Q.31

How does real-time scheduling differ from normal scheduling ?

Ans. Normal scheduling provides no

guarantee on when a critical process will be scheduled; it guarantees only that

the process will be given preference over non-critical processes. Real-time

systems have stricter requirements. A task must be serviced by its deadline;

service after the deadline has expired is the same as no service at all.

Q.32

What is Shortest-Remaining-Time-First (SRTF) ?

Ans. If a new process arrives with a CPU burst length less than the remaining time of the currently executing process, the current process is preempted. This scheme is known as Shortest-Remaining-Time-First.
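The preemption rule can be sketched as a unit-time simulation. This is a minimal sketch, assuming integer arrival times and burst lengths; the function name `srtf` and the four-process workload are hypothetical.

```python
def srtf(processes):
    """Simulate Shortest-Remaining-Time-First one time unit at a time.

    processes: list of (name, arrival_time, burst_length) tuples.
    Returns a dict mapping each name to its completion time.
    """
    remaining = {name: burst for name, _, burst in processes}
    arrival = {name: arrived for name, arrived, _ in processes}
    clock = 0
    completed = {}
    while remaining:
        ready = [name for name in remaining if arrival[name] <= clock]
        if not ready:
            clock += 1                   # CPU idle until the next arrival
            continue
        # Re-evaluating every tick is what makes the policy preemptive:
        # a newcomer with less remaining time wins the next time unit.
        current = min(ready, key=lambda name: remaining[name])
        remaining[current] -= 1
        clock += 1
        if remaining[current] == 0:
            del remaining[current]
            completed[current] = clock
    return completed


result = srtf([("P1", 0, 8), ("P2", 1, 4), ("P3", 2, 9), ("P4", 3, 5)])
# P2 preempts P1 at time 1 because its burst (4) is shorter than P1's
# remaining time (7).
```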

Q.33

What is round robin CPU scheduling ?

Ans. Each process

gets a small unit of CPU time (time quantum). After this time has elapsed, the

process is preempted and added to the end of the ready queue.
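The behaviour can be sketched with a queue. This is a minimal simulation, assuming all processes arrive at time 0; the function name `round_robin`, the burst lengths and the quantum of 4 are hypothetical.

```python
from collections import deque


def round_robin(bursts, quantum):
    """Simulate round robin for processes that all arrive at time 0.

    bursts: dict mapping a process name to its CPU burst length.
    Returns a dict mapping each name to its completion time.
    """
    remaining = dict(bursts)
    ready = deque(bursts)                # ready queue in arrival order
    clock = 0
    completed = {}
    while ready:
        name = ready.popleft()
        run = min(quantum, remaining[name])
        clock += run
        remaining[name] -= run
        if remaining[name] == 0:
            completed[name] = clock
        else:
            ready.append(name)           # preempted: back of the ready queue
    return completed


finish = round_robin({"P1": 24, "P2": 3, "P3": 3}, quantum=4)
```

With these numbers the two short processes finish at times 7 and 10, while the long one is repeatedly preempted and finishes at time 30.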

Q.34 What

is meant by starvation in operating system?

Ans. Starvation is a resource management

problem where a process does not get the resources (CPU) it needs for a long

time because the resources are being allocated to other processes.

Q.35

What is aging ?

Ans. Aging is a technique to avoid starvation in a scheduling system. It works by adding an aging factor to the priority of each request. The aging factor must increase the request's priority as time passes and must ensure that a request will eventually be the highest priority request.
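The effect of an aging factor can be seen in a toy scheduler. The sketch below assumes that a larger number means a higher priority and that a process returns to its base priority after it runs; both are assumptions of this example, as are the names `schedule_with_aging` and `aging_step`.

```python
def schedule_with_aging(base_priority, rounds, aging_step=1):
    """Pick one process per round, aging every process left waiting.

    base_priority: dict mapping a process name to its base priority
    (larger number = higher priority in this sketch).
    Returns the list of names in the order they ran.
    """
    effective = dict(base_priority)
    order = []
    for _ in range(rounds):
        chosen = max(effective, key=effective.get)
        order.append(chosen)
        for name in effective:
            if name == chosen:
                effective[name] = base_priority[name]   # reset after running
            else:
                effective[name] += aging_step           # waiting raises priority
    return order


with_aging = schedule_with_aging({"high": 10, "low": 1}, rounds=12)
without_aging = schedule_with_aging({"high": 10, "low": 1}, rounds=12, aging_step=0)
```

Without aging the low priority process never runs in these 12 rounds; with aging its effective priority climbs until it overtakes the other process and gets the CPU in round 11.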

Q.36

How to solve starvation problem in priority CPU scheduling ?

Ans.

Aging: as time progresses, increase the priority of the process, so that eventually it becomes the highest priority process and gains the CPU, i.e. the more time a process spends waiting in the ready queue, the higher its priority becomes.

Q.37 What

is convoy effect?

Ans.

A convoy effect happens when a set of processes need

to use a resource for a short time, and one process holds the resource for a

long time, blocking all of the other processes. Essentially it causes poor

utilization of the other resources in the system.

Q.38

How can starvation / indefinite blocking of processes be avoided in priority

scheduling ?

Ans. A solution to the problem of

indefinite blockage of processes is aging. Aging is a technique of gradually

increasing the priority of processes that wait in the system for a long time.

Q.39 "Priority inversion is a condition that occurs in real time systems where a low priority process is starved because higher priority processes have gained hold of the CPU" - Comment on this statement. AU CSE: May-17

Ans. A low priority thread always starts on a shadow version of the shared resource; the original resource remains unchanged. When a high-priority thread needs a resource engaged by a low-priority thread, the low priority thread is preempted, the original resource is restored and the high priority thread is allowed to use the original resource.

Q.40

Provide two programming examples in which multithreading provides better performance than a single-threaded solution.

Ans.

1. A Web server that services each request in a separate thread.

2. A parallelized application such as matrix multiplication, where different parts of the matrix may be worked on in parallel.

3. An interactive GUI program such as a debugger, where one thread is used to monitor user input, another thread represents the running application, and a third thread monitors performance.

Q.41

State what does a thread share with peer threads.

Ans. Threads share the memory and resources of the process to which they belong.

Q.42

Define a thread. State the major advantage of threads.

Ans. A thread is a flow of execution through the process's code with its own program counter, system registers and stack.

Advantages:

1. Minimize context switching time.

2. Efficient communication.

Q.43 Can a multithreaded solution using

multiple user-level threads achieve better performance on a multiprocessor

system than on a single processor system ?

Ans. A multithreaded system comprising multiple user-level threads cannot make use of the different processors in a multiprocessor system simultaneously. The operating system sees only a single process and will not schedule the different threads of the process on separate processors. Consequently, there is no performance benefit associated with executing multiple user-level threads on a multiprocessor system.

Q.44

What are the differences between user-level threads and kernel-level threads ?

(Refer section 2.8.3)

Q.45 What

are the benefits of multithreads?

Ans. The benefits of multithreading are responsiveness, resource sharing, economy and utilization of multiprocessor architecture.

Q.46

Why is a thread called a light weight process ?

Ans. A thread is light weight because it takes fewer resources than a process. It is called a light weight process to emphasize the fact that a thread is like a process but is more efficient and uses fewer resources. Threads of the same process also share its address space.

Q.47 Name one situation where threaded programming is normally used.

Ans. Threaded programming would be used when a program should handle multiple tasks at the same time. A good example of this would be a program with a GUI.

Q.48

Describe the actions taken by a thread library to context switch between

user-level threads.

Ans. Context switching between user threads is quite similar to switching between kernel threads, although it is dependent on the threads library and how it maps user threads to kernel threads. In general, context switching between user threads involves taking a user thread off its LWP and replacing it with another thread. This act typically involves saving and restoring the state of the registers.

Q.49

What is a thread pool ?

Ans. A thread pool

is a collection of worker threads that efficiently execute asynchronous

callbacks on behalf of the application. The thread pool is primarily used to

reduce the number of application threads and provide management of the worker

threads.
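As an illustration, Python's standard library offers a thread pool through `concurrent.futures.ThreadPoolExecutor`. The task function `square` and the pool size of 4 are assumptions made only for this sketch.

```python
from concurrent.futures import ThreadPoolExecutor


def square(n):
    """A stand-in for one unit of work submitted to the pool."""
    return n * n


# A fixed pool of 4 worker threads serves all 10 tasks; the application
# never creates or destroys a thread per task itself.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(square, range(10)))
```

`pool.map` returns the results in the order of the inputs, regardless of which worker finished first.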

Q.50

What is deferred cancellation in thread ?

Ans. The target

thread periodically checks whether it should terminate, allowing it an

opportunity to terminate itself in an orderly fashion. With deferred

cancellation, one thread indicates that a target thread is to be cancelled, but

cancellation occurs only after the target thread has checked a flag to

determine if it should be cancelled or not. This allows a thread to check

whether it should be cancelled at a point when it can be cancelled safely.
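The flag-checking idea can be sketched with Python's `threading.Event`. This is an analogy rather than the Pthreads mechanism itself; the names `worker`, `cancel_requested` and `progress` are hypothetical.

```python
import threading


def worker(cancel_requested, progress):
    """Work in small steps, checking the cancellation flag between steps."""
    while not cancel_requested.is_set():      # safe point to be cancelled
        progress.append(len(progress))        # one unit of dummy work
        cancel_requested.wait(0.001)          # short pause, ends early once set
    progress.append("cleaned up")             # orderly shutdown before exiting


cancel_requested = threading.Event()
progress = []
target = threading.Thread(target=worker, args=(cancel_requested, progress))
target.start()

cancel_requested.set()     # another thread indicates the target is to be cancelled
target.join()              # the target terminates itself at its next check
```

The requesting thread only sets the flag; the target decides when it is safe to stop, so it can release resources first.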

Q.51 What

is Pthread ?

Ans. Pthreads refers to the POSIX standard defining an API for thread creation and synchronization. This is a specification for thread behavior, not an implementation. Operating system designers may implement the specification in any way they wish.

Q.52 What is

thread cancellation ?

Ans. Under normal circumstances, a thread terminates when it exits normally, either by returning from its thread function or by calling pthread_exit. However, it is possible for a thread to request that another thread terminate. This is called canceling a thread.

Q.53 List

the benefit of multithreading.

Ans. Benefits of multithreading:

1. It takes less time to create a thread than a new process.

2. It takes less time to terminate a thread than a process.

Q.54

Under what circumstances are user-level threads better than kernel-level threads ?

Ans. User-level threads are much faster to switch between, as the switch does not involve the kernel; further, a problem-domain-dependent algorithm can be used to schedule among them. CPU-bound tasks with interdependent computations, or a task that will switch among threads often, might best be handled by user-level threads.

Q.55

What resources are required to create threads?

Ans. Thread is

smaller than a process, so thread creation typically uses fewer resources than

process creation.

Creating either a user or kernel thread

involves allocating a small data structure to hold a register set, stack, and

priority.

Q.56

Differentiate single threaded and multi-threaded processes.

Ans. Single-threading is the processing of one command at a time. When the thread is paused, the system waits until this thread is resumed. In multithreaded processes, threads can be distributed over a series of processors to scale. When one thread is paused for some reason, the other threads run as normal.

Q.57

Give a programming example in which multithreading does not provide better performance than a single-threaded solution.

Ans. Any kind of sequential program is not a good candidate to be threaded. An example of this is a program that calculates an individual tax return.

Another example is a

"shell" program such as the C-shell or Korn shell. Such a program

must closely monitor its own working space such as open files, environment

variables, and current working directory.

Q.58 Define

mutual exclusion.

Ans. If a collection of processes share

a resource or collection of resources, then often mutual exclusion is required

to prevent interference and ensure consistency when accessing the resources.

Q.59

How the lock variable can be used to introduce mutual exclusion?

Ans. We consider a single, shared (lock) variable, initially 0. When a process wants to enter its critical section, it first tests the lock. If the lock is 0, the process sets it to 1 and then enters the critical section. If the lock is already 1, the process just waits until the (lock) variable becomes 0. Thus, a 0 means that no process is in its critical section, and a 1 means hold your horses: some process is in its critical section.

Q.60

What is the hardware feature provided in order to perform mutual exclusion

operation indivisibly ?

Ans. Hardware features can make any programming task easier and improve system efficiency. Many systems provide special hardware instructions that allow a program to test and modify the content of a word atomically, that is, as one uninterruptible unit.

Q.61 Discuss why implementing synchronization primitives by disabling interrupts is not appropriate in a single processor system if the synchronization primitives are to be used in user level programs.

Ans. If a user level program is given

the ability to disable interrupts, then it can disable the timer interrupt and

prevent context switching from taking place, thereby allowing it to use the

processor without letting other processes execute.

Q.62

What is race condition ?

Ans. A race condition is a situation where two or more processes access shared data concurrently and the final value of the shared data depends on the timing of their execution.
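To make the timing dependence concrete, the sketch below interleaves two increments by hand instead of using real threads, so the unlucky schedule is reproduced every time. The function names and the shared `counter` are hypothetical.

```python
def interleaved_increments():
    """Two 'processes' each add 1, but their steps overlap."""
    shared = {"counter": 0}

    a_local = shared["counter"]          # A reads 0
    b_local = shared["counter"]          # B reads 0 before A writes back
    shared["counter"] = a_local + 1      # A writes 1
    shared["counter"] = b_local + 1      # B writes 1, overwriting A's update
    return shared["counter"]


def serialized_increments():
    """The same two increments, each completing before the next starts."""
    shared = {"counter": 0}
    for _ in range(2):
        shared["counter"] = shared["counter"] + 1
    return shared["counter"]


lost_update = interleaved_increments()   # 1: one increment was lost
correct = serialized_increments()        # 2
```

The same code gives 1 or 2 depending only on the order of the reads and writes, which is exactly what mutual exclusion is meant to rule out.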

Q.63

Define entry section and exit section.

Ans. Each process

must request permission to enter its critical section. The section of the code

implementing this request is the entry section. The critical section is

followed by an exit section. The remaining code is the remainder section.

Q.64

Elucidate mutex locks with its procedure.

Ans. A mutex lock is a software tool for solving the critical-section problem. A mutex lock has a boolean variable, available, whose value indicates whether the lock is available or not. If the lock is available, a call to acquire() succeeds, and the lock is then considered unavailable. A process that attempts to acquire an unavailable lock must wait until the lock is released by a call to release().
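The acquire and release pattern can be tried out with Python's `threading.Lock`, used here as a stand-in for the mutex lock described above. The shared counter, the thread count and the name `add_many` are assumptions of this sketch.

```python
import threading

state = {"counter": 0}          # shared data protected by the lock
lock = threading.Lock()


def add_many(times):
    """Increment the shared counter, one critical section per increment."""
    for _ in range(times):
        lock.acquire()          # entry section: wait until the lock is available
        try:
            state["counter"] += 1   # critical section
        finally:
            lock.release()      # exit section: make the lock available again


threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Because every update happens while the lock is held, the four threads together always leave the counter at 40000.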

Q.65

Name any two file system objects that are neither files nor directories and

what the advantage of doing so is.

Ans. Semaphores and monitors are such system objects. The advantage is to avoid the critical section problem.

Q.66 What

is binary semaphore ?

Ans. Binary semaphore is a semaphore

with an integer value that can range only between 0 and 1.

Q.67

What is semaphore? Mention its importance in operating systems.

Ans. A semaphore is an integer variable. It is a synchronization tool used to solve the critical section problem. It is important because the various hardware based solutions to the critical section problem are complicated for application programmers to use.

Q.68

What is the meaning of the term busy waiting ?

Ans. Busy waiting means a process waits

by executing a tight loop to check the status/value of a variable.

Q.69 What is bounded waiting ?

Ans. After a process has made a request to enter its critical section and before it is granted permission to enter, there exists a bound on the number of times that other processes are allowed to enter their critical sections.

Q.70

Why can't you use a test and set instruction in place of a binary semaphore ?

Ans.

A binary semaphore requires either a busy wait or a

blocking wait, semantics not provided directly in the Test and Set. The

advantage of a binary semaphore is that it does not require an arbitrary length

queue of processes waiting on the semaphore.

Q.71 What

is concept behind strong semaphore and spinlock ?

Ans. Semaphores can be implemented in user applications and in the kernel. A semaphore in which the process that has been blocked the longest is released from the queue first is called a strong semaphore.

A simple lock variable does not solve the process synchronization problem. To avoid this, a spinlock is used. A lock that uses busy waiting is called a spinlock.

Q.72 What is bounded buffer problem ?

Ans. The bounded buffer producer-consumer problem assumes a buffer of fixed size, i.e. a finite number of slots is available. The task is to suspend the producers when the buffer is full, to suspend the consumers when the buffer is empty, and to make sure that only one process at a time manipulates the buffer, so that there are no race conditions or lost updates.

Q.73

State the assumption behind the bounded buffer producer consumer problem.

Ans. Assumption: It is assumed that the pool consists of 'n' buffers, each capable of holding one item. The mutex semaphore provides mutual exclusion for accesses to the buffer pool and is initialized to the value 1.
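A common way to meet these requirements uses three semaphores. The sketch below is one such arrangement in Python, assuming a pool of 3 buffers, one producer and one consumer; the semaphore names `empty` and `full` and the helper functions are hypothetical, while `mutex` follows the answer above.

```python
import threading
from collections import deque

N = 3                                   # number of buffers in the pool (assumed)
buffer = deque()
mutex = threading.Semaphore(1)          # mutual exclusion, initialized to 1
empty = threading.Semaphore(N)          # counts free buffers
full = threading.Semaphore(0)           # counts filled buffers
consumed = []


def producer(items):
    for item in items:
        empty.acquire()                 # suspend while the buffer is full
        with mutex:                     # only one process touches the buffer
            buffer.append(item)
        full.release()                  # one more filled buffer


def consumer(count):
    for _ in range(count):
        full.acquire()                  # suspend while the buffer is empty
        with mutex:
            consumed.append(buffer.popleft())
        empty.release()                 # one more free buffer


p = threading.Thread(target=producer, args=(range(10),))
c = threading.Thread(target=consumer, args=(10,))
p.start()
c.start()
p.join()
c.join()
```

With a single producer and a single consumer the items come out in the order they went in, and the buffer never holds more than N items.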

Q.74 What

is a critical section and what requirements must a solution to the critical

section problem satisfy ?

Ans. Consider a system consisting of several

processes, each having a segment of code called a critical section, in which

the process may be changing common variables, updating tables, etc. The

important feature of the system is that when one process is executing its

critical section, no other process is to be allowed to execute its critical

section. Execution of the critical section is mutually exclusive in time.

A solution to the critical section

problem must satisfy the following three requirements: 1. Mutual exclusion 2.

Progress 3. Bounded waiting

Q.75 Define 'monitor'. What does it consist of? AU: CSE/IT: Dec.-11

Ans. Monitor is a highly structured programming language construct. It consists of private variables and private procedures that can only be used within a monitor.

Q.76

Explain the use of monitors.

Ans. Use of monitors:

a) It provides a mutual exclusion facility.

b) A monitor supports synchronization by the use of condition variables.

c) A shared data structure can be protected by placing it in a monitor.
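Python has no monitor construct, but a class that guards private data with a single `threading.Condition` behaves much like one. The sketch below is an approximation under that assumption; the class `AccountMonitor` and its methods are hypothetical.

```python
import threading


class AccountMonitor:
    """Monitor-style class: private data plus one condition variable."""

    def __init__(self):
        self._balance = 0                            # shared data kept private
        self._funds_available = threading.Condition()

    def deposit(self, amount):
        with self._funds_available:                  # one thread inside at a time
            self._balance += amount
            self._funds_available.notify_all()       # wake up waiting threads

    def withdraw(self, amount):
        with self._funds_available:
            while self._balance < amount:
                self._funds_available.wait()         # release the lock and sleep
            self._balance -= amount
            return self._balance


account = AccountMonitor()
result = []
t = threading.Thread(target=lambda: result.append(account.withdraw(30)))
t.start()
account.deposit(50)       # lets the withdrawing thread proceed if it is waiting
t.join()
```

Whichever thread runs first, the withdrawal completes only after the deposit, leaving a balance of 20.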

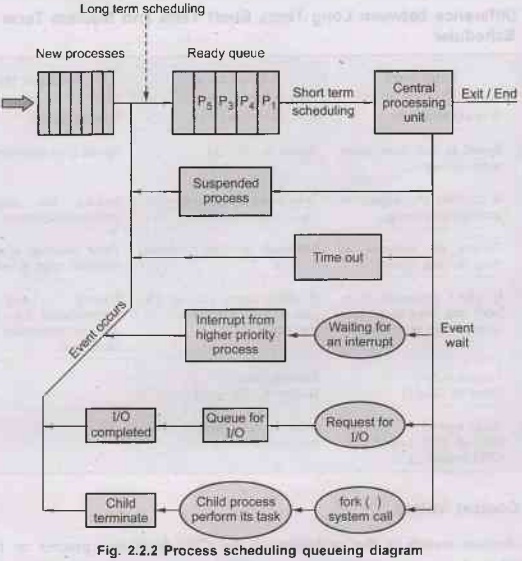

Q.77 Give the queueing diagram representation of process scheduling.

Ans. Refer Fig. 2.2.2.

Q.78

What are kernel threads ? AU: May-22
