Introduction to Operating Systems: Unit II(a): Process Management

Threads


• A thread is a dispatchable unit of work. It consists of a thread ID, a program counter, a stack and a register set. A thread is also called a Light Weight Process (LWP) because it takes fewer resources than a process. A thread is easy to create and destroy.

• A thread shares many attributes of its process, such as the address space and open files. Threads are scheduled on a processor, and each thread can execute a set of instructions independently of other threads and processes.

• Every program has at least one thread. Programs without multithreading execute sequentially: after one instruction is executed, the next instruction in sequence is executed.

• A thread is the basic unit to which an operating system allocates processor time, and more than one thread can be executing code inside a process.

• When a user double-clicks an icon with the mouse, the operating system creates a process, and that process has one thread that runs the icon's code.

• Each thread belongs to exactly one process, and no thread can exist outside a process. Each thread represents a separate flow of control.

• Normally, an operating system has a separate thread for each different activity. The OS has a separate thread for each process, and that thread performs OS activities on behalf of the process; in this arrangement, each user process is backed by a kernel thread. When a process issues a system call to read a file, the process's kernel thread takes over, determines which disk accesses to generate, and issues the low-level instructions required to start the transfer. It then suspends until the disk finishes reading in the data.

• When a process starts up a remote TCP connection, its thread handles the low-level details of sending out network packets.

• In Remote Procedure Call (RPC), servers are multithreaded: the server receives messages using a separate thread.

• Different operating systems use threads in different ways:

1. Linux manages free memory using a kernel thread.

2. Solaris uses kernel threads for interrupt handling.

Thread Advantages

1. Context switching time is minimized.

2. Threads support efficient communication.

3. Resource sharing is possible using threads.

4. A thread provides concurrency within a process.

5. It is more economical to create and context-switch threads than processes.

Difference between Thread and Process

Thread Lifecycle

1. New: The thread has been created, but its start() method has not yet been invoked.

2. Ready: The thread's start() method has been invoked and the thread is ready to run. The OS puts the thread into the ready queue.

3. Running: The highest-priority ready thread enters the running state. The thread is assigned a processor and is now running.

4. Blocked: The thread is waiting to acquire a lock in order to access an object.

5. Waiting: The thread is waiting indefinitely for another thread to perform an action.

6. Sleeping: The thread sleeps for a specified period of time. When the sleeping time expires, it enters the ready state. A sleeping thread does not use the CPU.

7. Dead: The thread has completed its task or operation.

Thread Programming and Libraries

• The subroutines that make up the Pthreads API can be informally grouped into four major groups:

1. Thread management: Routines that work directly on threads, such as creating, detaching and joining them. They also include functions to set/query thread attributes (joinable, scheduling, etc.).

2. Mutexes: Routines that deal with synchronization, called a "mutex", which is an abbreviation for "mutual exclusion". Mutex functions provide for creating, destroying, locking and unlocking mutexes.

3. Condition variables: Routines that address communication between threads that share a mutex, based upon programmer-specified conditions. This group includes functions to create, destroy, wait and signal based upon specified variable values. Functions to set/query condition variable attributes are also included.

4. Synchronization: Routines that manage read/write locks and barriers.

Basic thread functions include the creation and termination of threads. There are five such thread functions.

pthread_create Function

• When a program is started by exec, a single thread, known as the initial thread or main thread, is created. Additional threads are created by pthread_create.

#include <pthread.h>

int pthread_create(pthread_t *tid, const pthread_attr_t *attr,
                   void *(*func)(void *), void *arg);

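• Each thread is identified by a thread ID, whose data type is pthread_t. Each thread also has numerous attributes, such as its priority and its initial stack size; passing a null pointer as attr gives the default attributes. The new thread starts by calling the function whose address is specified as the func argument, with the single pointer argument arg, and then terminates either explicitly (by calling pthread_exit) or implicitly (by returning from that function). pthread_create returns 0 on success and a positive error number on failure.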

pthread_join Function

• A thread can wait for a given thread to terminate by calling pthread_join. Comparing threads to Unix processes, pthread_create is similar to fork, and pthread_join is similar to waitpid.

#include <pthread.h>

int pthread_join(pthread_t tid, void **status);

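The tid of the thread to wait for must be specified; there is no way to wait for any one of the threads (unlike waitpid with a process ID argument of -1). If status is not a null pointer, the thread's return value (a pointer) is stored in the location pointed to by status.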

pthread_self Function

• Each thread has an ID that identifies it within a given process. This thread ID is returned by pthread_create and is used by pthread_join.

• A thread fetches this value for itself by calling pthread_self.

#include <pthread.h>

pthread_t pthread_self(void);

pthread_detach Function

• A thread is either joinable (the default) or detached. When a joinable thread terminates, its thread ID and exit status are retained until another thread calls pthread_join.

• A detached thread is like a daemon process: when it terminates, all its resources are released, and no other thread can wait for it to terminate. The pthread_detach function changes the specified thread so that it is detached.

#include <pthread.h>

int pthread_detach(pthread_t tid);

pthread_exit Function

One way for a thread to terminate is to call pthread_exit.

#include <pthread.h>

void pthread_exit(void *status);

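• If the thread is not detached, its thread ID and exit status are retained for a later pthread_join by some other thread in the calling process. The status pointer must not point to an object that is local to the exiting thread, because that object disappears when the thread terminates.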

pthread_sigmask, pthread_kill

The prototypes of the pthread_sigmask and pthread_kill functions are:

#include <signal.h>
#include <pthread.h>

int pthread_sigmask(int mode, const sigset_t *sigsetp, sigset_t *oldsetp);

int pthread_kill(pthread_t tid, int signum);

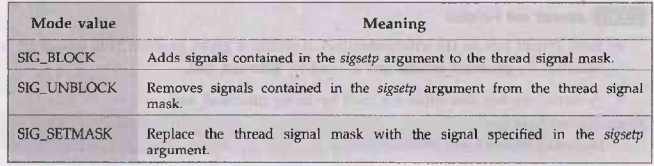

The pthread_sigmask function sets the signal mask of the calling thread. The sigsetp argument contains one or more signal numbers to be applied to the calling thread. If oldsetp is not a null pointer, the thread's previous signal mask is returned through it.

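• The mode argument specifies how the signals in the sigsetp argument are to be used. The possible values of mode are declared in the <signal.h> header, and their meanings are:

SIG_BLOCK: add the signals in sigsetp to the calling thread's signal mask.

SIG_UNBLOCK: remove the signals in sigsetp from the calling thread's signal mask.

SIG_SETMASK: replace the calling thread's signal mask with sigsetp.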

• The pthread_kill function sends the signal specified in the signum argument to the thread whose ID is given by the tid argument. The sending and receiving threads must be in the same process.

• If the default action for a signal is to terminate the process, then sending that signal to a thread still kills the entire process.

sched_yield

The function prototype of the sched_yield API is:

#include <sched.h>

int sched_yield(void);

• The sched_yield function is called by a thread to yield its execution to other threads with the same priority. This function returns 0 on success and -1 on failure.

Thread Scheduling

• An application can be implemented as a set of threads that cooperate and execute concurrently in the same address space. Four general approaches to multiprocessor thread scheduling are as follows:

1. Load sharing

2. Gang scheduling

3. Dedicated processor assignment

4. Dynamic scheduling

1. Load sharing

• Threads are not assigned to a particular processor. When a processor is idle, it selects a thread from a global queue serving all processors.

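• In this method, the load is evenly distributed among processors. No centralized scheduler is required: when a processor becomes available, the OS scheduling routine runs on that processor to select the next thread.

• This policy can be implemented in different ways, such as FCFS or priority-based selection, depending on how the shared queue is structured.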

Three different versions of load sharing are:

a. First-come-first-served (FCFS): When a thread arrives, it is placed at the end of the shared queue. When a processor becomes idle, it selects the next ready thread and executes it until the thread completes or blocks.

b. Smallest number of threads first: The shared ready queue is organized as a priority queue, with the highest priority given to threads of the job that has the smallest number of unscheduled threads. Jobs of equal priority are served in FCFS order.

c. Preemptive smallest number of threads first: Highest priority is given to jobs with the smallest number of unscheduled threads. An arriving job with fewer threads than an executing job preempts threads belonging to the scheduled job.

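Advantages of Load sharing

1. No centralized scheduler is required.

2. The load is distributed evenly across processors.

3. A single global queue is maintained by the system.

Disadvantages of Load sharing

1. The central queue must be accessed with mutual exclusion, so it can become a bottleneck when many processors look for work at the same time.

2. The threads of a program are unlikely to run at the same time, so programs that require a high degree of coordination between their threads can suffer from frequent switches.

3. Caching becomes less efficient, because a preempted thread is unlikely to resume on the same processor.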

2. Gang scheduling

• With gang scheduling, a set of related threads is scheduled to run on a set of processors at the same time, on a one-to-one basis.

• Gang scheduling affects synchronization blocking: if closely related threads execute in parallel, synchronization blocking may be reduced, less process switching may be necessary, and performance may improve.

• A set of related threads is scheduled to run on a set of processors.

• Gang scheduling has three parts:

1. A group of related threads is scheduled as a unit, a gang.

2. All members of a gang run simultaneously on different timeshared CPUs.

3. All gang members start and end their time slices together.

• The trick that makes gang scheduling work is that all CPUs are scheduled synchronously. This means that time is divided into discrete quanta.

• An example of how gang scheduling works is given in Fig. 2.7.1. Here we have a multiprocessor with six CPUs being used by five processes, A through E, with a total of 24 ready threads.

• During time slot 0, threads A0 through A5 are scheduled and run.

• During time slot 1, threads B0, B1, B2, C0, C1 and C2 are scheduled and run.

• During time slot 2, D's five threads and E0 get to run.

• The remaining six threads belonging to process E run in time slot 3. Then the cycle repeats, with slot 4 being the same as slot 0, and so on.

• Gang scheduling is useful for applications whose performance severely degrades when any part of the application is not running.

3. Dedicated processor assignment

• When an application is scheduled, each of its threads is assigned to a processor, which remains dedicated to that thread until the application completes.

• Some processors may be idle, and there is no multiprogramming of processors.

• This approach provides implicit scheduling defined by the assignment of threads to processors. For the duration of program execution, each program is allocated a set of processors equal in number to the number of threads in the program. Processors are chosen from the available pool.

4. Dynamic scheduling

• The number of threads in a process can be altered dynamically by the application.

• Both the operating system and the application are involved in making scheduling decisions. The OS is responsible for partitioning the processors among the jobs.

When a job requests processors, the operating system adjusts the load to improve processor utilization:

1. Assign idle processors to satisfy the request.

2. Otherwise, a new arrival may be assigned a processor taken from a job that is currently using more than one processor.

3. Hold any part of the request that cannot be satisfied until a processor becomes available.


CS3451 4th Semester CSE Dept | 2021 Regulation