Introduction to Operating Systems: Unit II(a): Process Management

Inter-Process Communication


Inter-Process Communication (IPC) allows two or more separate, independent processes or threads to exchange data. Operating systems provide facilities for IPC, such as message queues, semaphores, and shared memory.


• A complex programming environment often uses multiple cooperating processes to perform related operations. These processes must communicate with each other and share resources and information. The kernel must provide mechanisms that make this possible; these mechanisms are collectively referred to as interprocess communication.

• Distributed computing systems use these facilities to provide an Application Programming Interface (API) that allows IPC to be programmed at a higher level of abstraction (e.g., send and receive).

• Five types of inter-process communication are as follows:
1. Shared memory permits processes to communicate by simply reading and writing to a specified memory location.
2. Mapped memory is similar to shared memory, except that it is associated with a file in the file system.
3. Pipes permit sequential communication from one process to a related process.
4. FIFOs are similar to pipes, except that unrelated processes can communicate because the pipe is given a name in the file system.
5. Sockets support communication between unrelated processes, even on different computers.

• Purposes of IPC:
1. Data transfer: One process may wish to send data to another process.
2. Sharing data: Multiple processes may wish to operate on shared data, such that if a process modifies the data, that change is immediately visible to the other processes sharing it.
3. Event notification: A process may wish to notify another process or set of processes that some event has occurred.
4. Resource sharing: Although the kernel provides default semantics for resource allocation, these are not suitable for all applications.
5. Process control: A process such as a debugger may wish to assume complete control over the execution of another process.

• IPC takes two forms: IPC on the same host and IPC between different hosts.

IPC is used for two functions:
1. Synchronization: Used to coordinate access to resources among processes and to coordinate the execution of these processes. Synchronization mechanisms include record locking, semaphores, mutexes and condition variables.
2. Message passing: Used when processes wish to exchange information. Message passing takes several forms, such as pipes, FIFOs, message queues and shared memory.

Pipes

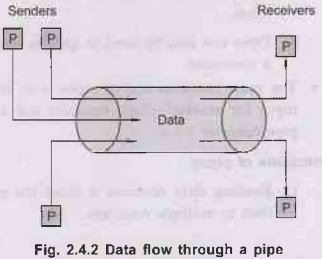

• A pipe is a unidirectional, first-in first-out, unstructured data stream of fixed maximum size. Writers add data to the end of the pipe; readers retrieve data from the front of the pipe.

• Once read, the data is removed from the pipe and is unavailable to other readers. A pipe provides a simple flow-control mechanism.

• A process attempting to read from an empty pipe blocks until more data is written to the pipe. A process trying to write to a full pipe blocks until another process reads data from the pipe.

• The pipe system call creates a pipe and returns two file descriptors: one for reading and one for writing. These descriptors are inherited by child processes, which thus share access to the pipe.

• Each pipe can have several readers and writers. A given process may be a reader, a writer, or both. Fig. 2.4.2 shows data flow through a pipe.

• Pipes can be used only between processes that have a common ancestor. Normally, a process creates a pipe and then calls fork, and the pipe is used between the parent and the child.

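• Example showing how to create and use a pipe. err_sys() is the error-reporting helper used in Stevens' UNIX programming books; a minimal version is defined here so that the program compiles on its own.

    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>

    /* Minimal stand-in for err_sys(): print the error and exit. */
    static void err_sys(const char *msg)
    {
        perror(msg);
        exit(1);
    }

    int main(void)
    {
        int pipefd[2], n;
        char buff[100];

        if (pipe(pipefd) < 0)
            err_sys("pipe error");

        printf("read fd = %d, write fd = %d\n", pipefd[0], pipefd[1]);
        fflush(stdout);   /* flush before the unbuffered write() below */

        /* Write 12 bytes into the write end of the pipe... */
        if (write(pipefd[1], "hello world\n", 12) != 12)
            err_sys("write error");

        /* ...and read them back from the read end. */
        if ((n = read(pipefd[0], buff, sizeof(buff))) <= 0)
            err_sys("read error");

        write(1, buff, n);   /* fd 1 = stdout */
        return 0;
    }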

Properties of pipes:
1. Pipes do not have a name. For this reason, the communicating processes must share a common ancestor; this is the main drawback of pipes. However, pipes are accessed through file descriptors, so they remain open even after fork and exec.
2. Pipes do not distinguish between messages; a read simply returns up to the requested number of bytes.
3. Pipes can also be used to get the output of a command or to provide input to a command.

• The most common use of pipes is to let the output of one program become the input for another. Users typically join two programs with a pipe using the shell's pipe operator (|).

Limitations of pipes:
1. Because reading data removes it from the pipe, a pipe cannot be used to broadcast data to multiple receivers.
2. Data in a pipe is treated as a byte stream and has no knowledge of message boundaries.
3. If there are multiple readers on a pipe, a writer cannot direct data to a specific reader.

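• Program for sending data from the parent process to the child process over a pipe. err_sys() (the error helper from Stevens' UNIX books) is given a minimal definition here, and the buffer size MAXLINE is assumed to be 4096.

    #include <stdio.h>
    #include <stdlib.h>
    #include <sys/types.h>
    #include <sys/wait.h>
    #include <unistd.h>

    #define MAXLINE 4096   /* assumed buffer size */

    static void err_sys(const char *msg)
    {
        perror(msg);
        exit(1);
    }

    int main(void)
    {
        int n;
        int fd[2];
        pid_t pid;
        char line[MAXLINE];

        if (pipe(fd) < 0)
            err_sys("pipe error");

        if ((pid = fork()) < 0) {
            err_sys("fork error");
        } else if (pid > 0) {            /* parent: writer */
            close(fd[0]);                /* close unused read end */
            write(fd[1], "hello world\n", 12);
            close(fd[1]);
            waitpid(pid, NULL, 0);       /* wait for the child to finish */
        } else {                         /* child: reader */
            close(fd[1]);                /* close unused write end */
            n = read(fd[0], line, MAXLINE);
            if (n > 0)
                write(STDOUT_FILENO, line, n);
        }
        exit(0);
    }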

• A pipe is created by calling the pipe function:

    #include <unistd.h>

    int pipe(int filedes[2]);   /* returns 0 if OK, -1 on error */

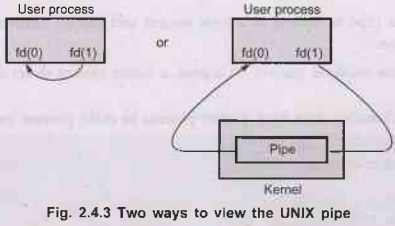

• Two file descriptors are returned through the filedes argument: filedes[0] is open for reading and filedes[1] is open for writing. The output of filedes[1] is the input for filedes[0].

• Fig. 2.4.3 shows two ways to view the UNIX pipe.

• The fstat function returns a file type of FIFO for the file descriptor of either end of a pipe. A pipe within a single process is of little use.

• Normally, the process that calls pipe then calls fork, creating an IPC channel from the parent to the child or vice versa.

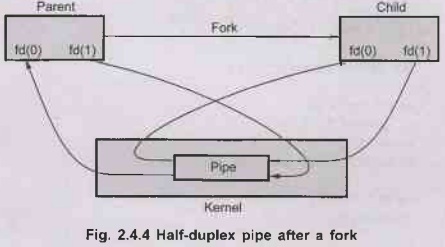

• Fig. 2.4.4 shows a half-duplex pipe after a fork.

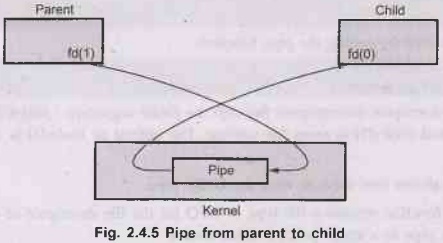

• Fig. 2.4.5 shows a pipe from parent to child. For a pipe from the parent to the child, the parent closes the read end of the pipe (fd[0]), and the child closes the write end (fd[1]).

• For a pipe from the child to the parent, the parent closes fd[1], and the child closes fd[0]. When one end of a pipe is closed, the following two rules apply:
1. If we read from a pipe whose write end has been closed, read returns 0 to indicate end of file after all the data has been read.
2. If we write to a pipe whose read end has been closed, the signal SIGPIPE is generated. If we either ignore the signal or catch it and return from the signal handler, write returns -1 with errno set to EPIPE.

Features of Message Passing

1. Simplicity: A message passing system should be simple and easy to use. It should be possible to communicate with both old and new applications.

2. Uniform semantics: Message passing is used for two types of IPC, and the same semantics should apply to both:
a. Local communication: The communicating processes are on the same node.
b. Remote communication: The communicating processes are on different nodes.

3. Efficiency: If the message passing system is not efficient, IPC becomes so expensive that users try to avoid it in their applications. A message passing system becomes more efficient when it reduces the number of message exchanges during communication, for example by:
a. Avoiding the costs of establishing and terminating connections.
b. Minimizing the costs of maintaining the connections.
c. Piggybacking acknowledgements on other messages.

4. Reliability: Distributed systems are prone to catastrophic events such as node crashes or communication link failures. A message may be lost because a communication link fails; handling lost messages requires an acknowledgement and retransmission policy. Duplicate messages are another major problem; they arise because of timeouts or failures (for example, when a message is retransmitted after its acknowledgement is lost).

5. Correctness: Correctness is a feature related to IPC protocols for group communication. Issues related to correctness are as follows:
i. Atomicity: Every message sent to a group of receivers is delivered to either all of them or none of them.
ii. Ordered delivery: Messages arrive at all receivers in an order acceptable to the application.
iii. Survivability: Messages are correctly delivered despite partial failures of processes, machines, or communication links.

6. Security: A message passing system must provide secure end-to-end communication.
7. Portability: The message passing system should itself be portable.

IPC Message Format

• A message passing system provides both synchronization and communication between two processes. Message passing is used as the method of communication in microkernels. Message passing systems come in many forms, and messages sent by a process can be of either fixed or variable size. The actual function of message passing is normally provided in the form of a pair of primitives:
a) send(destination_name, message)
b) receive(source_name, message)

• The send primitive is used for sending a message to a destination: a process sends information in the form of a message to another process designated by destination_name. A process receives information by executing the receive primitive, which indicates the source (the sending process) and the message.

• Design characteristics of a message system for IPC:
1. Synchronization between the processes
2. Addressing
3. Format of the message
4. Queueing discipline

Issues in IPC by Message Passing

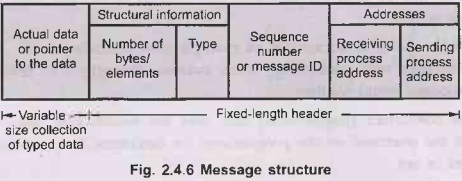

• A message is a meaningful, formatted block of information sent by the sender process to the receiver process.

• The message block consists of a fixed-length header followed by a variable-size collection of typed data objects.

• The header block of a message may contain the following elements:
1. Address: A set of characters that uniquely identifies both the sender and the receiver.
2. Sequence number: A message identifier used to detect duplicate and lost messages in case of system failures.
3. Structural information: This has two parts. The type part specifies whether the data to be sent to the receiver is included within the message or the message only contains a pointer to the data. The second part specifies the length of the variable-size message data.

• Fig. 2.4.6 shows the typical message format.

• Some important issues to be considered in the design of an IPC protocol for a message passing system:
i. The sender's identity
ii. The receiver's identity
iii. Number of receivers
iv. Guaranteed acceptance of sent messages by the receiver
v. Acknowledgment by the sender
vi. Handling system crashes or link failures
vii. Handling of buffers
viii. Order of delivery of messages

IPC Synchronization

• The send operation can be synchronous or asynchronous. The receive operation can be blocking or nonblocking.

• The sender and receiver processes can each operate in blocking or nonblocking mode. The possible combinations of sender and receiver are as follows:
1. Blocking send, blocking receive
2. Nonblocking send, blocking receive
3. Nonblocking send, nonblocking receive

Blocking send, blocking receive

• A blocking send must wait for the receiver to receive the message. Synchronous communication is an example of blocking send: both processes (sender and receiver) are blocked until the message is delivered.

Rendezvous: Sending a message to another process while the sender process stays suspended until the message is received and processed.

Nonblocking send, blocking receive

• The sender is free to send messages, but the receiver is blocked until the requested message arrives.

• Asynchronous communication is an example of nonblocking send. Asynchronous communication with nonblocking sends increases throughput by reducing the time that processes spend waiting.

• Nonblocking send is natural for many concurrent programming tasks. However, it places an overhead on the programmer, who must determine whether a message has been received.

Shared Memory

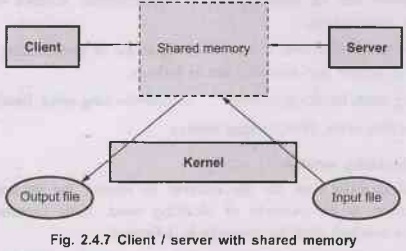

• A region of memory that is shared by cooperating processes is established. Processes can then exchange information by reading and writing data to the shared region.

• Shared memory allows maximum speed and convenience of communication, as it can be done at memory speeds within a computer. Shared memory is faster than message passing, because message-passing systems are typically implemented using system calls and thus require the more time-consuming task of kernel intervention.

• In contrast, in shared-memory systems, system calls are required only to establish shared-memory regions. Once shared memory is established, all accesses are treated as routine memory accesses, and no assistance from the kernel is required.

• Fig. 2.4.7 shows client/server with shared memory.

Advantages:
1. Good for sharing large amounts of data.
2. Very fast.

Limitations:
1. No synchronization is provided; applications must create their own.
2. An alternative to shared memory is the mmap system call, which maps a file into the address space of the caller.


CS3451 4th Semester CSE Dept | 2021 Regulation